old programmers who think that they could do stuff better. In reality we never
have the time to do anything more than what we are paid to do, but we can still
dream....

We were discussing the move to multi-core CPUs and how software development was
not keeping pace with it. As experienced programmers we were both convinced
that multi-threaded programming is not something that the average programmer
can deal with reliably. All the articles being published by Intel etc. seemed to
assume that making it easier to write multi-threaded applications
would somehow boost the acceptance of their new CPU designs.

My view is that there are only a few tasks that really need to be written for a
multi-threaded environment. Operating systems, virtual machines and some intensive
graphics processing are obvious candidates. The average business application
should not need this extra complication.

A multi-threaded application is inherently non-deterministic. This has some
important implications. For example, just because it worked once in test does not
mean that it will always work correctly. A change in the load, or in some other
timing factor, can change the behaviour radically. A passing test is now just a
statement that it is known to be possible for the application to work correctly. It
does not say much about the probability of it always working correctly.
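
A minimal sketch of that point, assuming two simulated threads that each perform a non-atomic `counter += 1` (a read followed by a write). The names `run_schedule` and `outcomes` are made up for this illustration. Instead of real threads, it enumerates every possible ordering of the four steps, so the result is repeatable:

```python
from itertools import permutations

def run_schedule(schedule):
    """Run two simulated threads that each do a non-atomic 'counter += 1'.

    schedule is a sequence of thread ids (0 or 1). Each appearance of an id
    executes that thread's next step: first a read, then a write.
    """
    counter = 0
    local = {}                 # the value each thread read
    step = {0: 0, 1: 0}        # how many steps each thread has taken
    for tid in schedule:
        if step[tid] == 0:
            local[tid] = counter          # step 1: read the shared value
        else:
            counter = local[tid] + 1      # step 2: write back the increment
        step[tid] += 1
    return counter

# Every distinct ordering of two steps from thread 0 and two from thread 1.
schedules = sorted(set(permutations([0, 0, 1, 1])))
outcomes = {s: run_schedule(s) for s in schedules}

correct = [s for s, value in outcomes.items() if value == 2]
wrong = [s for s, value in outcomes.items() if value != 2]
print(f"{len(correct)} of {len(outcomes)} schedules give 2; "
      f"{len(wrong)} lose an update")
# prints: 2 of 6 schedules give 2; 4 lose an update
```

A test run that happens to hit one of the two good schedules passes, and says nothing about the other four.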

In my career, most of the really hard-to-track-down bugs have been the result of
threading issues. In one case a programmer thought that he could improve the
performance of the application by using a global variable for a loop counter. It
took months before the timing caused the program to crash - the crash was caused
by the first thread being interrupted part way through the loop, and the second
thread leaving the loop counter past the end of the array the first thread was
indexing.
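
The original code is long gone, so the following is only a sketch of the failure mode, not a reconstruction. It assumes a summing loop and uses Python generators as stand-ins for threads, with each `yield` marking a point where a thread could be interrupted. All the names (`shared`, `summing_loop` and so on) are hypothetical:

```python
data = [10, 20, 30]
shared = {"i": 0}   # stands in for the global loop counter

def summing_loop(results):
    """Sum data using the shared counter; each yield marks a point where the
    simulated thread can be interrupted (just after the bounds check)."""
    shared["i"] = 0
    total = 0
    while shared["i"] < len(data):
        yield                       # interrupted here, between check and use
        total += data[shared["i"]]  # IndexError if the counter moved meanwhile
        shared["i"] += 1
    results.append(total)

def run_one_after_the_other():
    results = []
    for thread in (summing_loop(results), summing_loop(results)):
        for _ in thread:            # run each loop to completion in turn
            pass
    return results

def run_unlucky_interleaving():
    results = []
    a, b = summing_loop(results), summing_loop(results)
    next(a)                         # A passes the bounds check, then is interrupted
    for _ in b:                     # B runs its whole loop, leaving i == len(data)
        pass
    try:
        next(a)                     # A resumes and indexes with B's counter value
    except IndexError:
        return "crash", results
    return "ok", results

print(run_one_after_the_other())    # [60, 60]
print(run_unlucky_interleaving())   # ('crash', [60])
```

With real threads the unlucky interleaving needs the interruption to land in one narrow window, which is why it can take months to show up.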

In another case an X Windows GUI program was "improved" by adding an
event dispatching loop deep inside an event handler, presumably to prevent the
program from becoming non-responsive during a lengthy calculation. Occasionally
a fast typist would cause the program to re-enter some functions while they
were still being used for the previous event - much chaos would then ensue.
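
Re-entrancy of this kind does not even need a second thread. Here is a small sketch, assuming a hypothetical `Editor` class with a plain event queue (nothing here is X Windows API), of what a nested dispatch loop does to a handler that keeps its working state in a shared field:

```python
from collections import deque

class Editor:
    """Toy event loop; nested_dispatch mimics the 'improvement'."""

    def __init__(self, nested_dispatch):
        self.events = deque()
        self.nested_dispatch = nested_dispatch
        self.current = None     # the handler assumes it owns this while running
        self.output = []

    def post(self, key):
        self.events.append(key)

    def dispatch(self):
        while self.events:
            self.on_key(self.events.popleft())

    def on_key(self, key):
        self.current = key
        # ... the lengthy calculation would go here ...
        if self.nested_dispatch:
            self.dispatch()     # "stay responsive": this re-enters on_key
        self.output.append(self.current)   # may no longer be our key

def run(nested_dispatch):
    editor = Editor(nested_dispatch)
    for key in "abc":           # a fast typist: three keys already queued
        editor.post(key)
    editor.dispatch()
    return editor.output

print(run(False))   # ['a', 'b', 'c']
print(run(True))    # ['c', 'c', 'c']
```

With only one key in the queue both versions behave identically, which is why the bug only appeared occasionally.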

In yet another case an RPC server process that used a shared memory area for its
data did not have sufficient semaphore locks to prevent the occasional collision
when updating the data values. The sequence of events to trigger this situation
was spread out over several months, so you can imagine how hard it was to track
that one down - good logging is essential for this sort of investigation.
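
The fix for that class of bug is to make every read-modify-write of the shared data happen under a lock. The sketch below is an analogy rather than the real thing: it assumes threads in one process and Python's `threading.Lock` in place of processes, shared memory and semaphores, and the `SharedCounters` class is invented for the example:

```python
import threading

class SharedCounters:
    """Shared data where every access goes through one lock."""

    def __init__(self):
        self._values = {}
        self._lock = threading.Lock()

    def add(self, key, amount):
        # The read and the write happen under the same lock, so no other
        # thread can slip in between them.
        with self._lock:
            current = self._values.get(key, 0)
            self._values[key] = current + amount

    def get(self, key):
        with self._lock:
            return self._values.get(key, 0)

def worker(store, count):
    for _ in range(count):
        store.add("hits", 1)

store = SharedCounters()
threads = [threading.Thread(target=worker, args=(store, 10_000))
           for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(store.get("hits"))   # 40000
```

The catch is that this only works if every single update path takes the lock. Miss one and you are back to the occasional collision.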

The lesson to take from these examples is that even good programmers find it
difficult to design an algorithm involving multiple threads. For most people it
is hard enough just to cover all the alternative cases. Adding in the possibility
that another thread will change stuff at some random point in the chain is
generally not something that can be easily incorporated.

Agents

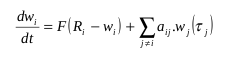

One possible approach to efficiently using multi-core machines without adding
the complication of multi-threading is to use an "agent"-based approach. The
idea is that the system is constructed from high level objects that only interact
using one-way messages. The code within the agent becomes standard event handling
code without the need for more than one thread - each message is processed
to completion before the next is pulled from the queue.
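
As a rough sketch of what agent code could look like, assuming a hypothetical `Agent` base class with a mailbox (the class names and message shapes are invented for illustration, not taken from any existing framework):

```python
from collections import deque

class Agent:
    """Base class: a mailbox plus a handler that runs one message at a time."""

    def __init__(self, name):
        self.name = name
        self.mailbox = deque()

    def send(self, message):
        """One-way message: enqueue it and return nothing to the sender."""
        self.mailbox.append(message)

    def handle(self, message):
        raise NotImplementedError

    def run_pending(self):
        # Each message is processed to completion before the next is pulled.
        while self.mailbox:
            self.handle(self.mailbox.popleft())

class Reporter(Agent):
    def __init__(self, name):
        super().__init__(name)
        self.seen = []

    def handle(self, message):
        self.seen.append(message)

class Accumulator(Agent):
    def __init__(self, name, reporter):
        super().__init__(name)
        self.total = 0              # private state, touched only by handle()
        self.reporter = reporter

    def handle(self, message):
        kind, value = message
        if kind == "add":
            self.total += value
        elif kind == "report":
            # Results also travel as one-way messages, never as return values.
            self.reporter.send(("total", self.total))

reporter = Reporter("reporter")
accumulator = Accumulator("accumulator", reporter)
accumulator.send(("add", 2))
accumulator.send(("add", 3))
accumulator.send(("report", None))
accumulator.run_pending()
reporter.run_pending()
print(reporter.seen)   # [('total', 5)]
```

Nothing in `handle` needs a lock, because nothing outside the agent can reach its state.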

Of course the virtual machine that runs this system is multi-threaded, but the
code for each agent is not. This means that the application programmer's job
becomes much simpler (at the expense of the one-off effort to create the
virtual machine).
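
A real virtual machine would be far more involved, but the division of labour can be sketched in a few lines. This assumes the simplest possible runtime, one worker thread per agent fed by a thread-safe `queue.Queue`. `ThreadedAgent` and `on_message` are hypothetical names, and a production runtime would more likely share a pool of threads between many agents:

```python
import queue
import threading

_STOP = object()   # private sentinel that tells an agent's thread to exit

class ThreadedAgent:
    """Runtime side: owns the thread and the queue, hides both from the handler."""

    def __init__(self, handler):
        self._handler = handler
        self._inbox = queue.Queue()
        self._thread = threading.Thread(target=self._loop)
        self._thread.start()

    def send(self, message):
        self._inbox.put(message)      # safe to call from any thread

    def stop(self):
        self._inbox.put(_STOP)
        self._thread.join()

    def _loop(self):
        while True:
            message = self._inbox.get()
            if message is _STOP:
                break
            self._handler(message)    # one message at a time, to completion

# Application side: plain single-threaded code with no locks.
state = {"total": 0}

def on_message(amount):
    state["total"] += amount          # only the agent's own thread runs this

agent = ThreadedAgent(on_message)

def producer():
    for _ in range(1000):
        agent.send(1)

producers = [threading.Thread(target=producer) for _ in range(4)]
for p in producers:
    p.start()
for p in producers:
    p.join()
agent.stop()

print(state["total"])   # 4000
```

All the threading lives in `ThreadedAgent`, which is written once. The handler is the only part the application programmer has to write.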

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.